Our main interaction design tool is a 3D animation application. This kind of software lets us bring all five expression layers together in a single timeline. The interaction then plays out as a structured script, much like writing a film or a live theater piece. Once we began working this way, we found ourselves treating every interaction unit as if we were animators working on an endless movie.

Our first step is to develop a script that describes the user’s general interactions and flows. Our second step is to draw a rough storyboard, which helps us tally the design assets each scene requires from our toolkit. Usually, at this stage, we combine those assets into one cohesive expression, built entirely around the sentence ElliQ will speak. After watching a 3D animated version of the scene, we refine the expression and send it directly to the cloud, where all of ElliQ’s behaviors are stored.

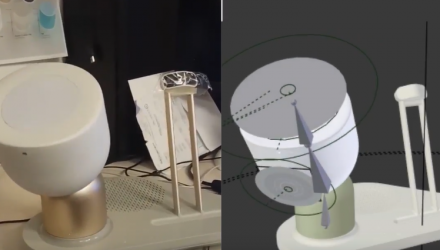

It is important to note that there is a clear difference between the 3D animation design and the final robotic interaction. An animator works from the point of view of an artificial camera, but our designers cannot predict where the person will be in the room or how they will be positioned. ElliQ is therefore programmed to behave more like a live actor, identifying the person in the room and adjusting her behavioral expressions based on context. The result is a unique interaction, every time, for every user: guided by design but controlled by you. This is the future of robotic interactions, and we’re excited to be paving the way.
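To make the camera-versus-room distinction concrete, here is a minimal sketch of how an authored movement might be retargeted at runtime. It is an illustration only, not ElliQ’s actual implementation: the `Keyframe` type, the `retarget_to_person` function, the yaw-only motion model, and the 90-degree limit are all assumptions made for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Keyframe:
    """One authored pose on the timeline (hypothetical, yaw only)."""
    time_s: float   # seconds from the start of the scene
    yaw_deg: float  # head yaw relative to the animator's camera (0 = facing it)


def retarget_to_person(keyframes, person_bearing_deg, max_yaw_deg=90.0):
    """Shift authored yaw so 'facing the camera' becomes 'facing the person'.

    person_bearing_deg is the assumed direction of the detected person
    relative to the robot's forward axis. The result is clamped to an
    assumed mechanical range of +/- max_yaw_deg.
    """
    retargeted = []
    for kf in keyframes:
        yaw = kf.yaw_deg + person_bearing_deg
        yaw = max(-max_yaw_deg, min(max_yaw_deg, yaw))
        retargeted.append(Keyframe(kf.time_s, yaw))
    return retargeted


# A short authored gesture: look at the camera, glance aside, look back.
authored = [Keyframe(0.0, 0.0), Keyframe(0.5, 15.0), Keyframe(1.0, 0.0)]

# At runtime the person is detected 40 degrees to one side.
adapted = retarget_to_person(authored, person_bearing_deg=40.0)
```

The design stays intact (the timing and shape of the gesture are unchanged), while its orientation depends on where the person actually is.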
