Last quarter, I wrote a column that was critical of the idea of using AI for financial decision-making. In my conclusion, I encouraged readers — especially advisors, who are busy task switchers — to “focus less on the whizbang tech and more on where in your day-to-day workflow you could use a little automation.” In other words, ignore the hype around AI and focus on the reality…

After the article was published, I got some great feedback from advisors saying that they were using ChatGPT here and there. For the most part, however, the overwhelming reaction to 2023's AI hype-a-palooza has been "Yawn, another bubble." Given that something like 20% of Nvidia's market capitalization is now probably due to AI hype, it's a reasonable reaction.

Is it all just hype, though? Some brilliant thinkers, like Berkeley's Professor Stuart Russell, continue to make the rounds urging us to slow down on AI. (Prediction: The world won't listen.) At the same time, popular headlines continue to encourage drama and fear-mongering, like a recent one from The Sun about AI gaining consciousness.

Honestly, it’s all a distraction. I’m a big believer in sticking to what’s right in front of us.

And what’s right in front of us has gotten shockingly useful.

Beyond ChatGPT: AI Tools in the Real World

Much of the initial AI hype this year focused on ChatGPT-4, which is only one model from one player in a very large and very diverse field of AI research. Even as Sam Altman, OpenAI's CEO, continues his world tour to explain the firm's products and vision, some very smart people are raising questions about what's going on under OpenAI's hood and suggesting that his endgame is a lot bigger.

That said, right now, logging into OpenAI once in a while doesn't actually improve anyone's daily life. For that reason alone, it's fair to be skeptical of the headlines. But some people are making huge strides in getting real, useful, high-value work done in mainstream business.

For example, this week researchers at Wharton, Harvard, MIT and the Boston Consulting Group published a paper with the roll-off-the-tongue title of, “Navigating the Jagged Technological Frontier: Field Experimental Evidence of the Effects of AI on Knowledge Worker Productivity and Quality.”

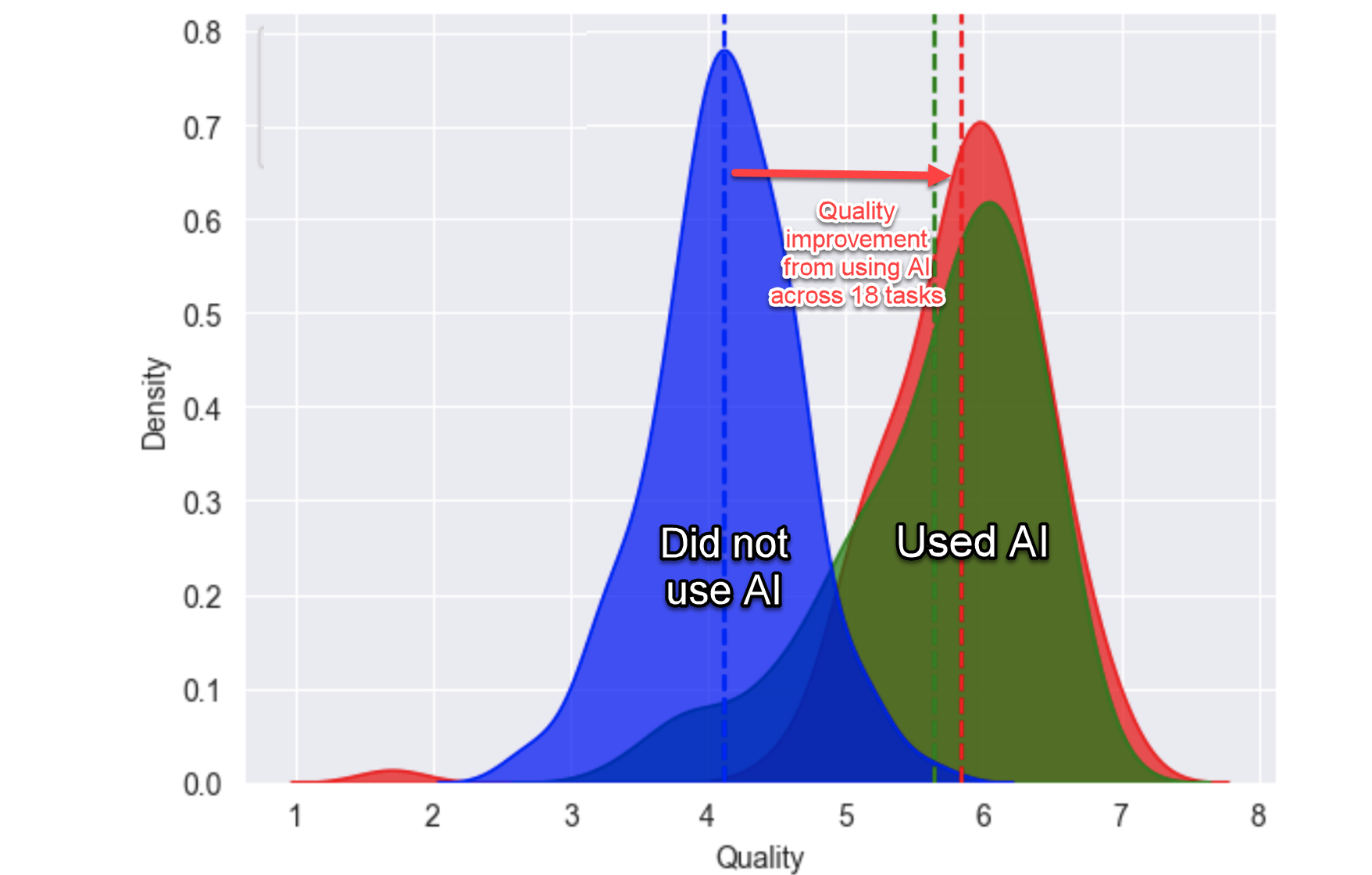

The researchers took 18 "tasks" representative of the kinds of work management consultants do. Think classic business school case studies, like "Prepare a 700-word competitive analysis note for the CEO of a widget company on [X]" or "Generate 10 alternative branding proposals based on [Y]." Then they asked 758 BCG consultants, some of whom were allowed to use AI, to complete the tasks. Afterward, the researchers ran a blind analysis of the complete body of work. The results were staggeringly clear.

The results are clear: The consultants who used AI produced higher-quality work.

Consultants who were allowed to use AI substantially and consistently outperformed their unaided peers. (The green group used ChatGPT-4 with no help, while orange received a generic prompting guide along with ChatGPT-4 access.) The results were so compelling that one of the researchers published a "Pop Sci summary" with the subheading: "I think we have an answer on whether AIs will reshape work…" This isn't consultants "cheating" to get out of doing the work. These are trained, highly paid professionals doing better, faster work with an AI assistant.

How I Used Perplexity.AI to Get Smart Again Fast

Anecdotally, we’re seeing more and more evidence of these same results every day, where real AI tools are solving real problems. Here’s a real-world example from my own workflow.

Many investors are unaware that the U.S. is headed toward T+1 settlement. This is the kind of nerdy stuff I field phone calls about all the time, and just this week, I got a call about it. Yet, honestly, I hadn't put much thought into the issue since sometime last year, when the laws changed.

My normal process for getting back up to speed on a topic I once had deep, current expertise in would include one or more of the following steps:

- Check my Notion, which is a poorly organized, loosely categorized hodgepodge of thousands of documents, ideas, articles, and such that I've collected over the years;

- Scroll through a giant folder system that I keep called “ETF Research,” which contains decades of saved PDFs;

- Spend several minutes on the internet re-familiarizing myself with the state of play by going to a bunch of trusted sources, usually through Google.

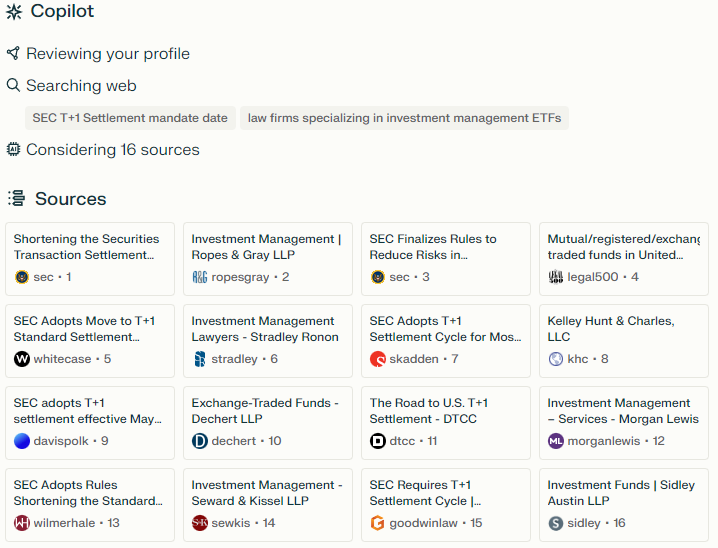

However, I've recently begun using Perplexity.ai as a starting point. Perplexity is a subscription-based search tool bolted onto one of several large language models, such as ChatGPT-4. To use it, you basically just talk to it. In this case, I went to my now-always-open Perplexity tab and typed, "When will the SEC mandate T+1 Settlement in the United States. Please cite only law firms that specialize in investment management and ETFs."

Perplexity then replied (instantly) with:

Perplexity.AI shows the sources it uses. It also allows you to tailor which ones you want.

Source: Perplexity.AI

A few things to note: First, it checks my profile, a page on which I’ve written a few hundred words about who I am, what I like and what I do, in plain language. Perplexity knows my favorite movie is The Princess Bride, that I like indie music, and so on. These details seem to subtly inform its responses — in a good way.

Second, you can see the two internet searches it ran, which turned up 16 potential sources, all displayed in numerical order. This is critical, because you can immediately see whether the model interpreted my instructions correctly. (In this case, it did; it looked through content from big law firms, not random Reddit threads.) You can limit Perplexity's search in a number of ways; for example, you can tell it to look only at academic research, Reddit, YouTube, or known sources of truth, like WolframAlpha.

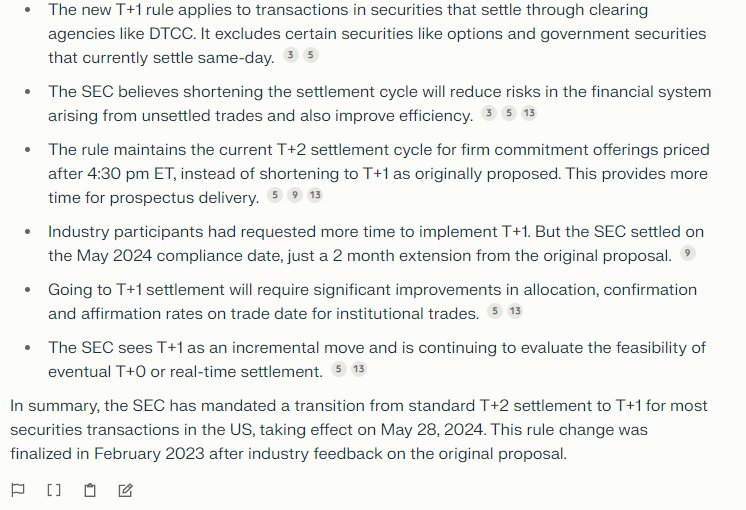

The answer, which took 10 seconds or so to generate, was as follows:

The output Perplexity.AI generated for me on T+1 settlement was both accurate and fast.

Source: Perplexity.AI

Perplexity directly answered my question in the first sentence (the deadline is May 28, 2024). The “key points” are, frankly, pretty spot on. And each key statement is supported by a number of sources (the gray circles), which you can click to read. If any were wrong, you can click on the [ ] button to pick and choose the sources you trust, then try again.

Note that there are no deep or new insights in this reply. I didn't ask the model to make something up. Instead, I asked it to succinctly summarize tens of thousands of words of internet-based content, which it did, quickly and accurately. Importantly, the system doesn't "know" anything. It simply used the power of the LLM to read, summarize, and interpret very quickly. If the search had returned a bunch of garbage websites (which we all see every day when we use Google), the summary would have been garbage, and I would have rewritten the query or killed off some of the bad sources.

AI Will Disrupt SEO-Driven Information Ecosystems

In my simple example above, AI didn't "do my work for me." I still had to know what to ask, how to ask it, and how to judge whether the answers were decent. And of course, my main goal was to get to primary sources to make sure my understanding of the topic was still relevant and timely, not to copy-paste a response somewhere. But it did save me time: about five minutes, maybe ten. That time savings alone is enough to make this interesting for me. Beyond that, the source management and suggested follow-up directions make Perplexity an incredible rabbit-hole accelerator. Topics on which I once might have spent an hour finding good primary sources, I can now delve into in a matter of minutes.

For the last week, I've used Perplexity.ai instead of Google entirely. Think about that for a minute. When was the last time you worked at a computer for a week without interacting directly with a search engine even once?

What does this mean for Google and its SEO- and ad-based model? Change. Competition. A shifting competitive landscape, to which Bard (pretty good!) is its response.

What does it mean for the 16 law firms whose content I never read, and whose revenue and content models I didn't support? None of those websites got my cookie, my identity, or even my attention. The most useful sources, I might click into. Anyone at the periphery? I'll never engage with them. And keep in mind: This tool is still very much in beta. It's only going to get more effective from here.

How does this not massively disrupt the entire SEO-based information and marketing ecosystem?

AI & Business: Too Early, Too Late, And Already Happening

Tomorrow, I'll sit down with two guests. The first is Alai's Andrew Smith Lewis, one of the smartest thinkers on how we learn and grow that I've ever had the pleasure of talking to, and the person who turned me on to the current state of Perplexity.ai. He's at the forefront of figuring out how real-world knowledge systems, like finance and education, can leverage AI today. The second is Zeno Mercer, VettaFi's Senior Research Analyst and expert on all things robotics and AI. On our webcast, "AI & Business: Too Early, Too Late, And Already Happening," we're going to discuss which businesses are most ripe for AI disruption, where the tools are headed, and what investors should do about it.

I hope you’ll join us.

“AI & Business: Too Early, Too Late, And Already Happening,” a webcast featuring VettaFi’s Zeno Mercer and Dave Nadig, as well as Alai’s Andrew Smith Lewis, will take place on Friday, September 22 at 2 PM ET. Registration is still open and available at the link above.
