The last month in the development of AI has been a heck of a decade. It’s nearly inconceivable you haven’t heard of ChatGPT, Midjourney, Stable Diffusion, Bard, or any of the now hundreds if not thousands of derivative projects. It would also be absolutely understandable if you wanted to dismiss all of this as just the latest Dogecoin distraction from the very real world of problems we have right now.

Here’s what I’m not going to do: I won’t try to convince you that ChatGPT is cool or show you a weird conversation I had with it. I won’t have it help me write this. I will certainly not pretend I’m an expert. However, if you’ve been living under a rock, here are some juicy things to pique your interest:

- Someone says ChatGPT saved his dog.

- Folks are letting ChatGPT run (small) businesses.

- Making D&D character art is trivial.

- Folks are having it handle their online dating for them.

- … or writing entire applications.

In the creative space, there’s no shortage of things to be amazed and terrified about, including the faux-Pope’s faux-puffy jacket and fake Kanye doing other people’s songs.

But here’s the thing: I have written (probably too much) about how I see all you advisors as kind of exploratory heroes whose literal job is to keep your eyes open on behalf of your clients. You follow the pheromone trails to new resources and new ideas, whether to avoid investing in buggy whips and FTX by accident or to find new opportunities others might be missing.

This isn’t a “head in the sand” moment. I think this is a “learn fast” moment.

I’m No Expert, but Neither Is ChatGPT

Over the past few months, I’ve gone pretty far into the AI rabbit hole, less to explore what I could do with a cool new tool (although I’ve done plenty of that) and more to try and understand what’s going on under the hood. There are lots of great videos explaining the fundamentals of large language models (and I am absolutely not some instant expert here), but here’s how I’ve dumbed it down enough so I can remember it and explain it:

At its core, ChatGPT does a single thing: It predicts the next word. The purest use case is to say something like: “Finish this sentence:”

There are a bunch of other things going on here, but at the core, the model determines, in order, each of the most likely words to follow “is” in the above fragment. It does this by using an enormously complex and yet shockingly elegant set of functions that parse:

- What’s statistically the most likely word to follow “is” based on reading everything ever written?

- Once it’s decided on “a,” it starts all over and finds statistics about what should follow “a.”

It develops those statistics based on several sets of work done long before I asked the question. First, researchers trained a statistical model on essentially every word humans have ever digitized. But if it stopped there, you’d get mostly the garbage we’ve seen out of chat bots to date.
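The predict-one-word-then-start-over loop described above can be sketched with a toy model. This is a minimal illustration, not how ChatGPT works internally: it counts which word follows which in a tiny made-up corpus (a "bigram" model), whereas a real large language model uses a neural network trained on vastly more text. The corpus and the function names `train_bigrams`, `predict_next`, and `generate` are all invented for this example.

```python
from collections import Counter, defaultdict

# Toy corpus, invented for illustration only.
CORPUS = (
    "the sky is blue . the sky is blue . the sky is clear . "
    "the sea is blue . the grass is green ."
)


def train_bigrams(text):
    """Count how often each word follows each other word."""
    counts = defaultdict(Counter)
    words = text.split()
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts


def predict_next(counts, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    followers = counts.get(word)
    if not followers:
        return None
    return followers.most_common(1)[0][0]


def generate(counts, start, max_words=5):
    """Repeatedly predict the next word, feeding each guess back in."""
    words = [start]
    for _ in range(max_words):
        next_word = predict_next(counts, words[-1])
        if next_word is None or next_word == ".":
            break  # stop at an unknown word or the end of a sentence
        words.append(next_word)
    return " ".join(words)


counts = train_bigrams(CORPUS)
print(predict_next(counts, "is"))
print(generate(counts, "the"))
```

In this corpus, "blue" follows "is" more often than any other word, so that is the prediction. Note the weakness: the toy model looks only at the single previous word, which is roughly why pure statistics without further machinery produce garbage.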

What really changed was the development of the “transformer.” A “transformer” in this context is a kind of architecture (outlined in an incredible paper by Google engineers in 2017) for manipulating statistical prediction data. The key innovation or insight is the idea that the system should not only look at all of human writing to discover that “blue” is a good word to put after “the sky is,” but also tweak that prediction based on patterns present both in the question and in the output that the model has generated to that point (and perhaps a raft of other tweaks for things like safety or copyright). These mechanisms for adjusting the model’s output on the fly, in response to both the input and the developing output, are called “attention” or “self-attention.”
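For the curious, here is a rough sketch of the arithmetic behind "self-attention," under heavy simplifying assumptions. Each word is represented by a short list of numbers (the two-number vectors below are made up), every word scores its similarity to every other word, and those scores become weights that decide how much each word contributes to the result. Real transformers add learned query, key, and value projections, multiple attention "heads," and many stacked layers, none of which appear here.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    highest = max(scores)
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    """Dot product: a simple similarity score between two vectors."""
    return sum(x * y for x, y in zip(a, b))


def self_attention(vectors):
    """Scaled dot-product self-attention with no learned projections.

    Simplification: each vector serves as its own query, key, and value.
    """
    dim = len(vectors[0])
    outputs, all_weights = [], []
    for query in vectors:
        # How relevant is every word (key) to the current word (query)?
        scores = [dot(query, key) / math.sqrt(dim) for key in vectors]
        weights = softmax(scores)
        # Blend all the word vectors according to those relevance weights.
        mixed = [
            sum(w * vec[i] for w, vec in zip(weights, vectors))
            for i in range(dim)
        ]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights


# Hypothetical 2-number "embeddings", invented for this example.
TOKENS = ["the", "sky", "is"]
EMBEDDINGS = [[0.1, 0.0], [1.0, 2.0], [0.2, 0.1]]

outputs, weights = self_attention(EMBEDDINGS)
for token, row in zip(TOKENS, weights):
    print(token, [round(w, 3) for w in row])
```

Each printed row shows how much one word "attends" to every word in the fragment, itself included. In a trained model, those weights are what let a comma or an earlier word change the prediction.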

By running lots and lots of data through lots and lots of models, you train the system to understand when a word or even a comma is relevant to a sentence being created. For instance, there’s the classic example of using the comma correctly in the sentence “Let’s eat, Grandma,” rather than suggesting her murder with “Let’s eat Grandma.” Somewhere along the way, the model analyzed the comma’s relationship to the other words in the sentence and now will reliably use commas the same way a decent human writer would.

Relevance Realization All the Way Down

If that all sounds just slightly familiar to regular readers, it should. What I’ve just described is machine-coded Relevance Realization. Modern AI models — those built on academic work not even six years old — train themselves on what is important by observing what humans have thought is essential in the training data, like how a child learns you can grab a cup by the handle by watching their parents do it, then trying it out, and taking the feedback that it works.

In cognitive science, Relevance Realization is the set of processes that help you determine what is important out of all the inputs facing you. When I am writing the sentence “Let’s eat, Grandma,” I have trained myself to recognize that comma placement is important. When I write the sentence “One day I was walking down the street slowly,” I pay a lot less attention to whether, for example, I should say, “I was walking down the street, slowly” because my internal Relevance Realization model has been trained that moving the comma will change the meaning far less in the second case.

I find it curious that the breakthrough that makes AI seem so believably human is encoding Relevance Realization. I also find it comforting and concerning.

I find it comforting because, honestly, I’m on a crusade to understand things better. Reframing my own learning and thinking around Relevance Realization has had a profound and positive impact. We discussed this a fair bit on stage in Florida. (Feel free to catch up at 85BB65, where I archived it all.) At a very human level, focusing on what’s important is a superpower. You get better at it (with apologies to John Vervaeke’s literally hundreds of hours of lectures on the topic) by learning how to pay attention better and striving to become a better critical thinker.

There are wells of practices to dive into, from meditation to religions to brain-training apps. All of this is entirely trainable. So this baseline skill fills me with hope, because where better to lean in than teaching systems to understand relevance?

My concerns aren’t the ones other folks seem to be most worried about. I might as well lay out my rebuttals here:

I am not that worried about the impact on creators. Yes, it’s absolutely the case that a class of jobs will change and be challenged. Some folks will lose their gigs, but so did portrait painters after the invention of the camera. When I was in college, it was controversial when a friend did a gallery opening of art created entirely on a first-generation Mac with a dot-matrix printer. People protested it wasn’t art because a computer had generated the final image.

Do any of us think art “only counts” when it’s only ever seen human hands? What music, visual art, film, or even novel would survive that scrutiny in 2023? Believe me, I understand the fear. I have been paid to write in some capacity my entire life. I am confident that when technology makes everything anonymous, people will suddenly care a lot more about who is writing, drawing, or playing a song. See also: vinyl sales, Substacks, and non-generative NFT art. To hit an AI-nerd favorite, essentially nobody can beat a computer at chess, and yet chess has never been more popular, both as an activity and as a spectator sport.

No prompt is going to generate a Morgan Housel Psychology of Money bestseller, because Morgan Housel writes from and about the human experience. You can ask ChatGPT cookie-cutter financial planning questions, and it will parrot back a plausible blog-post bullet point. I am not worried. I am excited.

I am also not losing sleep over a rogue AI becoming SkyNet for a variety of reasons. Much smarter people than I can argue about it, but the reality is that my opinion doesn’t matter. I see the question as a bit of a Pascal’s Wager: Either AI is an existential threat because it will, at some point, develop its own volition, in which case the last thing it would do is tell us, or it’s not an existential threat of any kind near-term because we can’t even agree on volition and free will in human beings. The idea that an AI will spontaneously develop self-preservation, seek energy gradients, and go hostile just seems unrealistic.

Not only does a large language model (LLM) not “do” anything, it doesn’t actually “know” anything either. Consider the falderal this weekend about ChatGPT “teaching itself chemistry.”

When you ask ChatGPT a question about sodium and water, it doesn’t “know” that adding them together is bad. It simply starts writing sentences and looking at every statistical connection between words it’s seen that include “sodium” and “water.” Then, it goes about probabilistically creating a response that will likely include words like “exothermic” and “explosion” because, well, how many thousands of textbooks, lab reports, and newspaper articles have covered these ideas before? It doesn’t “know” anything. It just knows the historic human connections between ideas and is good at extrapolating. Put more simply, it’s not learning how anything works, it’s only learning what humans have written down and spitting it back out as best it can.

People Perturb Everything

And that’s where I’m concerned. The potential dangers of these sharp new tools are the same dangers we face from every new tool: people. It’s not that someone is going to invent an imaginary rapper to out-Kanye Kanye and put him out of business; it’s that the same tech will be used for myriad deep fakes, and we’re going to be challenged to discern them. It’s not that ChatGPT will become sentient and decide to do bad things; it’s that people hell-bent on doing bad things will learn to use these tools to help.

Exactly like, say, the internet. Or cell phones. Or drones. Or cryptography. Or even a financial technology like ETFs.

But here’s the thing: Not only are we the tool-users, but we are also the training data the tools are built on, for good or ill. ChatGPT is us, statistically. One prevailing and intriguing guess at how humans have “consciousness” is “Integrated Information Theory” or IIT, which posits that complexity is itself what creates consciousness (which, if you keep going down the rabbit hole, starts having some interesting implications for the nature of reality, but that’s Tom Morgan’s beat at Sapient).

How are we complex enough to be conscious? Well, in the argot of AI: Perhaps it’s because we’ve been training on a data set countless millennia old (encoded in our very biology), assisted by various transformer functions that allow us to have both local context (what does my model of the world look like) and in-the-moment context (what am I thinking, feeling and doing) — which turn out to be the big ideas in neuroscience right now as well.

Put another way, we’re discovering how we think pretty much in real time by trying to teach machines how to think. That’s not scary to me. It’s incredibly exciting. AI isn’t about creating better thinkers out there. It’s about creating cognitive tools for real humans. We can’t imagine what those tools will be any more than a merchant in 400 BC could imagine Microsoft Excel. This is using the computing power and storage we have available today. Just wait until we get ubiquitous quantum computing in a decade, or a year, or next week.

Keep Talking and Nobody Explodes

Sitting here this morning writing, the only thing I can think of is playing a VR game called “Keep Talking and Nobody Explodes” during the pandemic. My son sat on the couch wearing a VR helmet, staring at a janky cartoon bomb covered with switches, wires, and knobs.

“Complex wires!” my son yells.

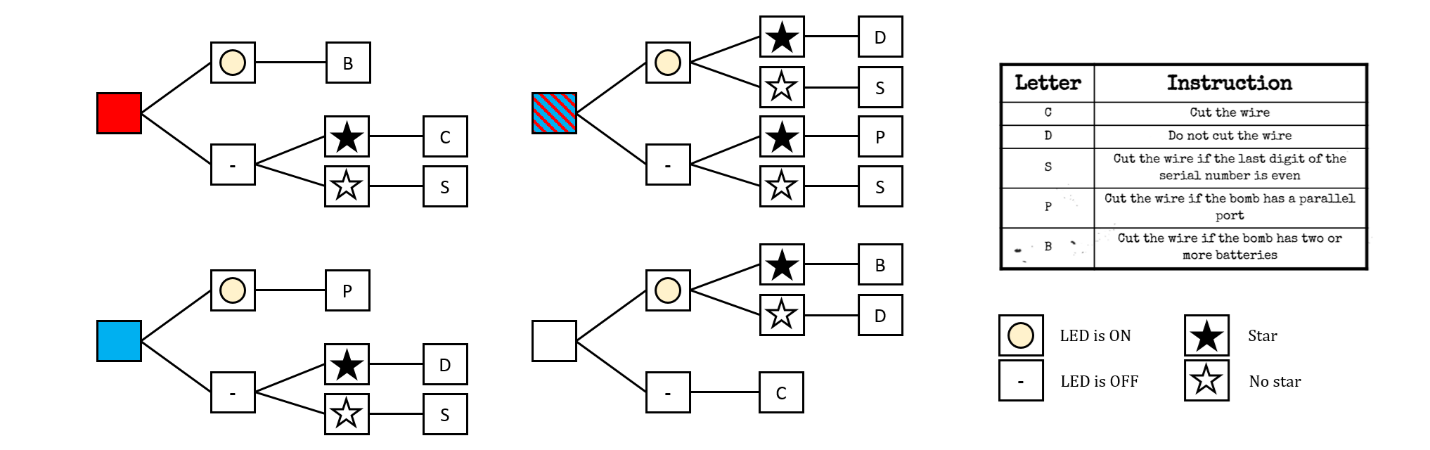

Sitting next to him, I flip open a three-ring binder. It opens to a page labeled “complex wires” with the following diagram:

“That’s me,” I say. My wife and daughter have their own binders open. “Okay, buddy, let’s take them one at a time. What color is the wire?”

“Red.”

I ask a few more questions, and he cuts the red wire.

Inside VR, the bomb explodes, as we have once again failed on this level of the game.

The premise is simple: Groups work together to solve complex problems with limited amounts of information, time, and feedback. There’s a lot of yelling.

But in the end, the name of the game is also the solution to it. Keep talking and nobody explodes.

AI, LLMs, AGI, SkyNet, chat bots, generative art: Nobody actually knows what the future holds, about anything, ever. But more than ever, it seems like sharing our experiences, explorations, victories, failures, hopes, and concerns is the answer. There is no putting any of this tech back into the box and hoping it goes away. It’s sitting here, right in your hands, right now. Use it. Talk. Listen. Learn. Iterate.

Keep talking and nobody explodes.

For more news, information, and analysis, visit VettaFi | ETF Trends.